Posts tagged with 'Brief Bio'

Welcome to the latest installment of the Brief Bio series, where I'm writing up very informal biographies about major figures in the history of computers. Please take a look at the Brief Bio archive, and feel free to leave corrections and omissions in the comments.

John von Neumann

After Ada Lovelace's death, there's a pretty big lull in notable computer-related activity. World War II is the main catalyst for significant research and discovery, so that's why I'm skipping ahead to figures involved in that period. If you think there's someone worth mentioning that I've skipped over, please mention them in the comments.

But now, I'm skipping ahead about half a century to 1903, which is when John von Neumann was born. He was born in Budapest, in the Austro-Hungarian Empire, to wealthy parents. His father was a banker, and John Von Neumann's precociousness, especially in mathematics, can be at least partially attributed to his father's profession. Von Neumann tore through the educational system, and received a Ph.D. in mathematics when he was 22 years old.

Like Pascal, Leibniz, and Babbage, Von Neumann contributed to a wide variety of knowledge areas outside computing. Some of the high points of Von Neumann's work and contributions include:

He also worked on the Manhattan Project, and thus helped to end World War II in the Pacific. He was present for the very first atomic bomb test. After the war, he went on to work on hydrogen bombs and ICBMs. He applied game theory to nuclear war, and is credited with the strategy of Mutually Assured Destruction.

His work on the hydrogen bomb is where Von Neumann enters the picture as a founding father of computing. He introduced the stored-program concept, which would come to be known as the Von Neumann architecture.

Von Neumann became a commissioner of the United States Atomic Energy Commission. Here's a video of Von Neumann, while in that role, advocating for more training and education in computing.

In 1955, Von Neumann was diagnosed with some form of cancer, possibly related to his exposure to radiation at the first nuclear test. Von Neumann died in 1957, and is buried in Princeton Cemetery (New Jersey).

I encourage you to read more about him in John Von Neumann: The Scientific Genius Who Pioneered the Modern Computer, Game Theory, Nuclear Deterrence, and Much More (which looks to be entirely accessible on Google Books).

Ada Lovelace

Augusta Ada Byron, born in 1815, was not allowed to see a picture of her father, the Romantic poet Lord (George Gordon) Byron, until she turned 20. Her father never had a relationship with her. Her mother was distant as well, and even referred to Ada once as "it" instead of "her". In addition to these hurdles, Ada also suffered from vision-limiting headaches and paralyzing measles. However, if it weren't for these sicknesses, she might not be such a significant figure in the history of computing. Because she was part of the aristocracy and had access to books and tutors, she was able to learn about mathematics and technology even while sick, and picked up an interest in them.

She met Babbage in 1833 through a mutual friend. Because of her interest in technology and mathematics, she was tremendously interested in Babbage's Difference Engine. She became friends with Babbage--visiting and corresponding often.

Ada was married in 1835 and became a Baroness. After the birth of her first child, Annabella, she again suffered an illness for months. In 1838, her husband became the Earl of Lovelace. She became the Countess of Lovelace, which leads to her most commonly used moniker, "Ada Lovelace".

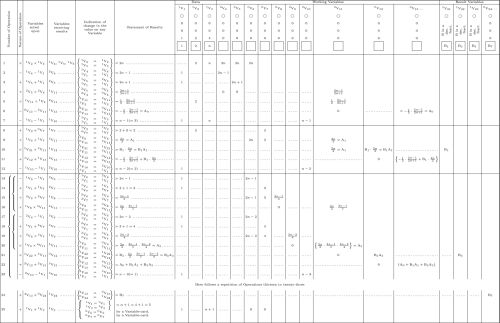

Starting in 1842, she was translating papers by Luigi Menabrea, who was writing about Babbage's proposal of the Analytical Engine. In one of these papers, Sketch of The Analytical Engine, Invented by Charles Babbage, there is a "note" or "appendix" called "Note G". This note is what many people point to as the first computer program. Not only is it the first program, it also demonstrates the core concept of the Analytical Engine: it's a more general-purpose machine than any of the adding machines that came before it. It shows that the machine would be capable of branching, addressing memory, and therefore processing an algorithm.

Paul Sinnett used Ada's algorithm to create a Scratch program that you can try out and use to visualize the algorithm (just click "See Inside").
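If you'd rather see the idea in a few lines of text, here's a rough sketch. The program in Note G computes Bernoulli numbers, and the Python below does the same job with a standard textbook recurrence. To be clear, this is not a transcription of Ada's table of operations: the function name `bernoulli_numbers`, the recurrence used, and the sign/indexing convention (B_1 = -1/2) are my own choices for illustration, and Note G numbers the values differently.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention: B_1 = -1/2).

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    solved for B_m. This is an illustrative stand-in, not Note G itself.
    """
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Loop over the previously computed values: this is the kind of
        # repetition the Analytical Engine was designed to handle.
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


if __name__ == "__main__":
    for index, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{index} = {value}")
```

Each new value depends on all of the earlier ones, so the calculation needs stored intermediate results and a loop, which is exactly what set the Analytical Engine apart from a plain adding machine.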

There is something of a controversy/dispute about Ada and this program. It's been argued that Babbage prepared the bulk of these notes for her, and therefore her contributions are not as significant. It's also argued that Babbage had already written several "programs" (albeit much simpler programs) prior to the Bernoulli program in Note G, and therefore Babbage is the first programmer, not Ada.

A notable passage that Babbage wrote about the Bernoulli algorithm with regard to Ada:

"We discussed together the various illustrations that might be introduced: I suggested several, but the selection was entirely her own. So also was the algebraic working out of the different problems, except, indeed, that relating to the numbers of Bernouilli, which I had offered to do to save Lady Lovelace the trouble. This she sent back to me for an amendment, having detected a grave mistake which I had made in the process."

So, at the very least, she created the first ever bug report. My judgement is that Ada is a significant colleague and collaborator of Babbage's. She was not just a translator or secretary; she contributed to his work in a significant and meaningful way. Babbage's work on the Analytical Engine probably wouldn't have gone nearly as far without Ada's help. Certainly she is just as worthy of celebration (every October there's an Ada Lovelace Day) as is Charles Babbage.

Ada and her husband were really into gambling. By 1851, she had started a gambling syndicate, and it's possible that she and her husband were trying to use mathematical models to get an edge on horse racing bookies. Seems like a great idea to me, given how advanced her knowledge of mathematics probably was compared to the majority of gamblers at the time; but apparently the execution didn't work, and they ran up huge gambling debts. She died from cancer the next year at the tragically young age of 36, leaving behind three children. A short life, plagued by illness and family struggles and ended by cancer. But "the light that burns twice as bright burns half as long."

You may have also heard of the Ada programming language, which is named after her.

Ada is a language that was developed for the U.S. Department of Defense. It has an emphasis on safety, containing a number of compile-time and run-time checks to avoid errors that other languages would otherwise allow, including buffer overflows, deadlocks, misspellings, etc. At one point, it was thought that Ada would become a dominant language because of some of its unique features, but that has obviously not happened. However, it's not a dead language and lives on in specialty applications. It's #32 on the TIOBE index as of this writing, beating out Fortran, Haskell, Scala, Erlang, and many others.

Charles Babbage

Like Pascal, Leibniz, and even Muller, Babbage was a man who was into a whole variety of fields: mathematics, economics, engineering, and so on. He was born in 1791 in London, on the day after Christmas, just a couple weeks after the Bill of Rights was ratified in the States. His father was a banker; and again, like the Pascal family, it's not difficult to imagine a house cluttered with mathematics. An Oxford tutor educated him and he was accepted at the University of Cambridge, but found their mathematics program to be disappointing (as shown in this photo):

A theme that I've noticed while researching Babbage is that his motivation seems to be one of replacing people (or rather, the inaccuracies and nuisances they cause). If t-shirts existed during his lifetime, I'm sure he would have at least considered purchasing "Go Away Or I Will Replace You...". Babbage spent some time later on in his life measuring and campaigning against public nuisances, even to the point of unpopularity. Babbage said that such nuisances destroyed up to 25% of his productivity. He's not only the ancestor of computing, but he's also the ancestor of the BOFH.

In 1820, he helped to form the (Royal) Astronomical Society. I believe that this is not because he was a romantic stargazer, but rather it was mostly a way for him to explore ways to reduce errors in astronomy (heavily used in sea navigation) with computing. He began work in 1822 on his now famous Difference Engine. He won an award 2 years later for inventing this machine (yes, he won an award from the very same organization that he helped to start). The machine was not actually completed until 1991, when it performed its first calculation.
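For the curious, the "difference" in Difference Engine refers to the method of finite differences, which lets you tabulate a polynomial using nothing but repeated addition. Here's a minimal sketch of that general method in Python. It's only meant to show the arithmetic idea, not to model Babbage's actual mechanism; the function names and the example polynomial are my own assumptions.

```python
def leading_differences(samples):
    """Return the first value and its leading finite differences.

    `samples` are consecutive values of a polynomial; a polynomial of
    degree d needs d + 1 samples to capture all non-zero differences.
    """
    diffs = []
    row = list(samples)
    while row:
        diffs.append(row[0])
        # Next row holds the differences between neighbouring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate(initial, count):
    """Produce `count` successive values using additions only."""
    registers = list(initial)
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Add each difference into the register above it, top to bottom.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


if __name__ == "__main__":
    # Hypothetical example polynomial: p(x) = x^2 + x + 41
    samples = [x * x + x + 41 for x in range(3)]
    start = leading_differences(samples)
    print(start)               # starting registers
    print(tabulate(start, 6))  # p(0) .. p(5), no multiplication needed
```

Once the starting registers are set, every further table entry costs just a handful of additions, which is the sort of repetitive work a machine can do without the copying and arithmetic slips that human table-makers introduced.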

Later on, Babbage went to work designing the Analytical Engine, which was to be a more general-purpose machine (a multitasker, as compared to the unitaskers of Pascal, Leibniz, Muller, and his own Difference Engine). Again, this machine (actually a collection of machines) was never completed. However, the designs include the use of punch cards as input, as well as branching, looping, and sequencing. Theoretically, this machine would be the first to ever be Turing-complete, and therefore the first ever device equivalent to what would generally be considered a computer.

The Analytical Engine concept marks the birth of computers, and even more importantly, marks the beginning of software (as will be explored later in the Ada Lovelace bio). However, this separation of concerns was just too abstract for the Victorian world, and he never got any funding to build the machine. Babbage died in 1871, still tinkering with his idea until the bitter end.

Plan 28 is a project to actually build an Analytical Engine. John Graham-Cumming gave a TEDx Talk about The Greatest Machine That Never Was:

Check out about 12 minutes in to see a part of the Analytical Engine in action. To compare this machine apples-to-apples with current computers, John Graham-Cumming has said that it would have 675 bytes of memory and a clock speed of 7 Hz. That's hertz, not megahertz. Still, I'd call that very impressive: the Atari 2600 only has 128 bytes of RAM (albeit a much, much faster clock speed, and it's not the size of a locomotive).

Babbage's legacy lives on as an icon of computers and computing. In addition to the multiple academic institutions that bear his name, there's a crater on the moon named after him. Do you remember the video game/electronics store "Babbage's"? Named after him.

J.H. Muller

Johann Helfrich von Muller, born in Germany in 1746, was the son of Lorenz Friedrich Muller, an architect and engineer.

There are scant sources of information on the internet available for Muller. It seems to be the case that he was a prolific inventor, engineering cool stuff like an immersive children's theater, a range finder, a barometer, and so on. Based on what little I can find, he seems to me very much in the same category as Thomas Edison: not really inventing or creating something brand new, but rather improving existing devices. He built a calculating machine which was an improved version of contemporary Philipp Hahn's machine, which in turn was based on Leibniz's stepped drum design.

His most notable invention is also his most interesting in the context of computing: a difference engine. Muller's invention was described briefly in an appendix of a volume containing details of the calculating machine. This machine was only a proposal, and was never built. However, Charles Babbage would later go on to actually build a machine very much like the one described by J.H. Muller, and it's entirely possible that Babbage was at least partially inspired by Muller's work.

There is a book that was published in 1990: Glory and Failure: The Difference Engines of Johann Muller, Charles Babbage, and Georg and Edvard Scheutz. I suspect this book contains more information about J.H. Muller than I could drum up, but it appears to be out of print, rather expensive, and lacking an ebook version. Unfortunately, that's beyond my budget for a single blog post, so J.H. Muller will remain mostly a footnote in the grander story of Charles Babbage for now.

Muller died in 1830.

Gottfried Wilhelm Leibniz

My series started with Blaise Pascal, but not because his contributions to computing were particularly significant. The Pascaline is pretty neat, but it's not programmable. Pascal contributed a lot to mathematics, science, and culture, but his effect on computing was relatively small compared to the next subject: Gottfried Wilhelm Leibniz. He was born in 1646, when Pascal was 23 years old, and lived 31 years longer than the ailing Pascal. Both men were apparently fond of outrageous haircuts, which must have been quite trendy in late-Renaissance Europe.

Like Pascal, Leibniz had a huge effect on mathematics, science, and culture. If you've heard his name before, it's quite likely you heard it in your college calculus class in the context of Leibniz's Notation. Leibniz actually invented calculus (he was a contemporary of Isaac Newton, but created it independently of him).

Leibniz also spent a lot of time working on mechanical calculators. With the Pascaline in mind, he invented the Leibniz wheel, which could hypothetically be used to construct a calculator that can also do multiplication. From 1672 to 1694, he worked on a Stepped Reckoner that used the Leibniz wheel. This machine actually had some flaws which required internal fiddling to correct. So relax: if Leibniz's product had bugs, then what hope do we have of shipping bugless software?

I couldn't find any video of a Stepped Reckoner (replica or otherwise), probably because it is a flawed device. But, I did find a video that shows how the Leibniz wheel works.

But Leibniz's contributions don't stop there. The binary number system was invented by Leibniz. Yeah, the binary number system that runs all the world's technology (which Leibniz predicted as well). He described (but didn't build) a system of marbles & punch cards that could be used to perform binary calculations (full original text of De progressione Dyadica [PDF]).
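To make "binary calculations" a little more concrete, here's a small Python sketch of writing a number in base 2 and adding two binary numbers digit by digit with a carry. This is just the schoolbook procedure, not a model of Leibniz's marble-and-punch-card design, and the function names are made up for this example.

```python
def to_binary(n):
    """Return the binary digits of a non-negative integer as a string."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # remainder is the next lowest bit
        n //= 2
    return "".join(reversed(digits))


def add_binary(a, b):
    """Add two binary strings column by column, carrying as needed."""
    result = []
    carry = 0
    i, j = len(a) - 1, len(b) - 1
    while i >= 0 or j >= 0 or carry:
        total = carry
        if i >= 0:
            total += int(a[i])
            i -= 1
        if j >= 0:
            total += int(b[j])
            j -= 1
        result.append(str(total % 2))  # digit that stays in this column
        carry = total // 2             # digit carried to the next column
    return "".join(reversed(result))


if __name__ == "__main__":
    five, three = to_binary(5), to_binary(3)
    print(five, "+", three, "=", add_binary(five, three))
```

With only two possible digits, each column's rule is tiny: a digit is either there or it isn't, and a carry either happens or it doesn't. That simplicity is what makes binary such a good fit for a physical machine.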

One quaint view of Leibniz's that I discovered was that he was optimistic about the eventual creation of a writing system/language that would solve all problems of ethics and metaphysics. "If we had such an universal tool, we could discuss the problems of the metaphysical or the questions of ethics in the same way as the problems and questions of mathematics or geometry...two philosophers could sit down at a table and just calculating, like two mathematicians, they could say, 'Let us check it up...'" I find this idea a bit naive, but ya gotta love the optimism.

Leibniz's beliefs about language, thought, and computing would become a very rudimentary prototype for later artificial intelligence research, and he's probably the reason some of my computer science courses were actually in the philosophy college.

After Leibniz's death in 1716, his legacy was in tatters. His philosophical beliefs were under heavy criticism, and his independent discovery of calculus was doubted: many believed that he was simply trying to take credit for something he hadn't discovered himself. As with so many historical figures, that poor short-term reputation would eventually give way to the position of respect that he holds today.